Publications

Publications in reverse chronological order, excluding collaboration papers. A complete list is available on Google Scholar.

2025

-

Bayesian model selection and misspecification testing in imaging inverse problems only from noisy and partial measurements. Tom Sprunck, Marcelo Pereyra, and Tobias Liaudat. arXiv e-prints, Oct 2025.

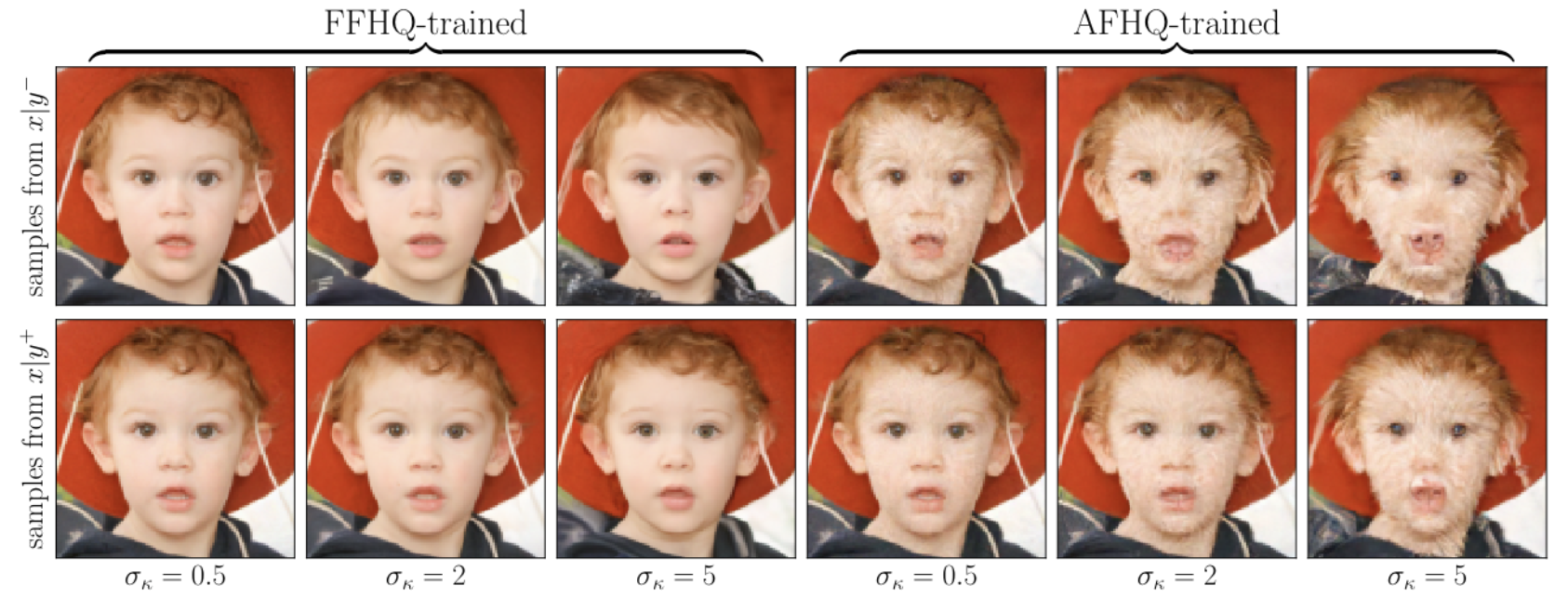

Modern imaging techniques heavily rely on Bayesian statistical models to address difficult image reconstruction and restoration tasks. This paper addresses the objective evaluation of such models in settings where ground truth is unavailable, with a focus on model selection and misspecification diagnosis. Existing unsupervised model evaluation methods are often unsuitable for computational imaging due to their high computational cost and incompatibility with modern image priors defined implicitly via machine learning models. We herein propose a general methodology for unsupervised model selection and misspecification detection in Bayesian imaging sciences, based on a novel combination of Bayesian cross-validation and data fission, a randomized measurement splitting technique. The approach is compatible with any Bayesian imaging sampler, including diffusion and plug-and-play samplers. We demonstrate the methodology through experiments involving various scoring rules and types of model misspecification, where we achieve excellent selection and detection accuracy with a low computational cost.

@article{sprunck2025, title = {Bayesian model selection and misspecification testing in imaging inverse problems only from noisy and partial measurements}, author = {Sprunck, Tom and Pereyra, Marcelo and Liaudat, Tobias}, year = {2025}, journal = {arXiv e-prints}, eprint = {2510.27663}, month = oct, archiveprefix = {arXiv}, primaryclass = {eess.IV}, url = {https://arxiv.org/abs/2510.27663} } -

UNIONS: The Ultraviolet Near-infrared Optical Northern Survey. Stephen Gwyn, Alan W. McConnachie, Jean-Charles Cuillandre, and 86 more authors. The Astronomical Journal, Nov 2025.

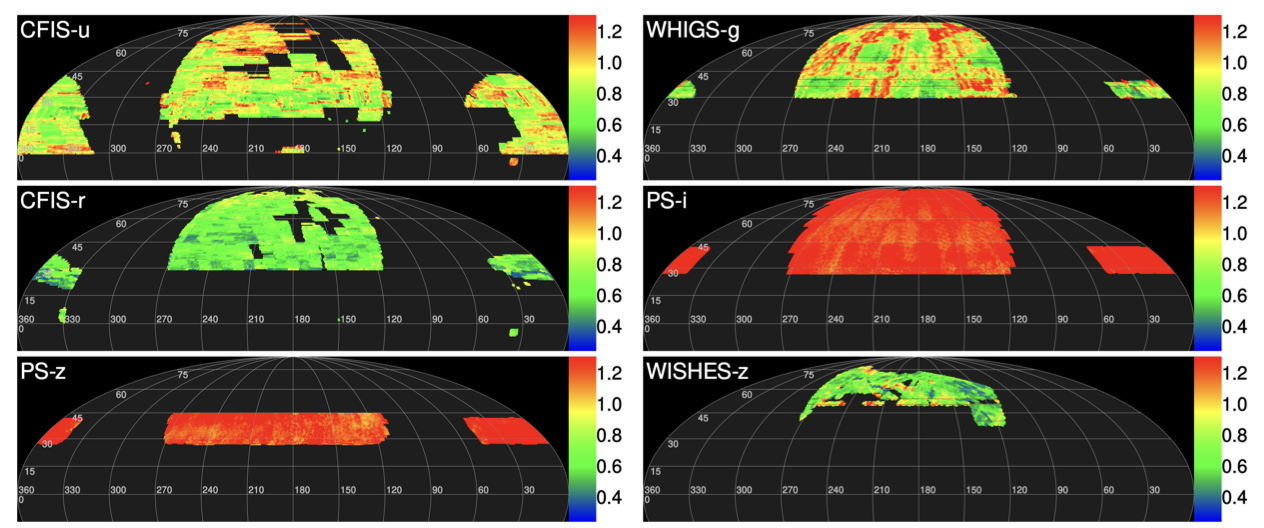

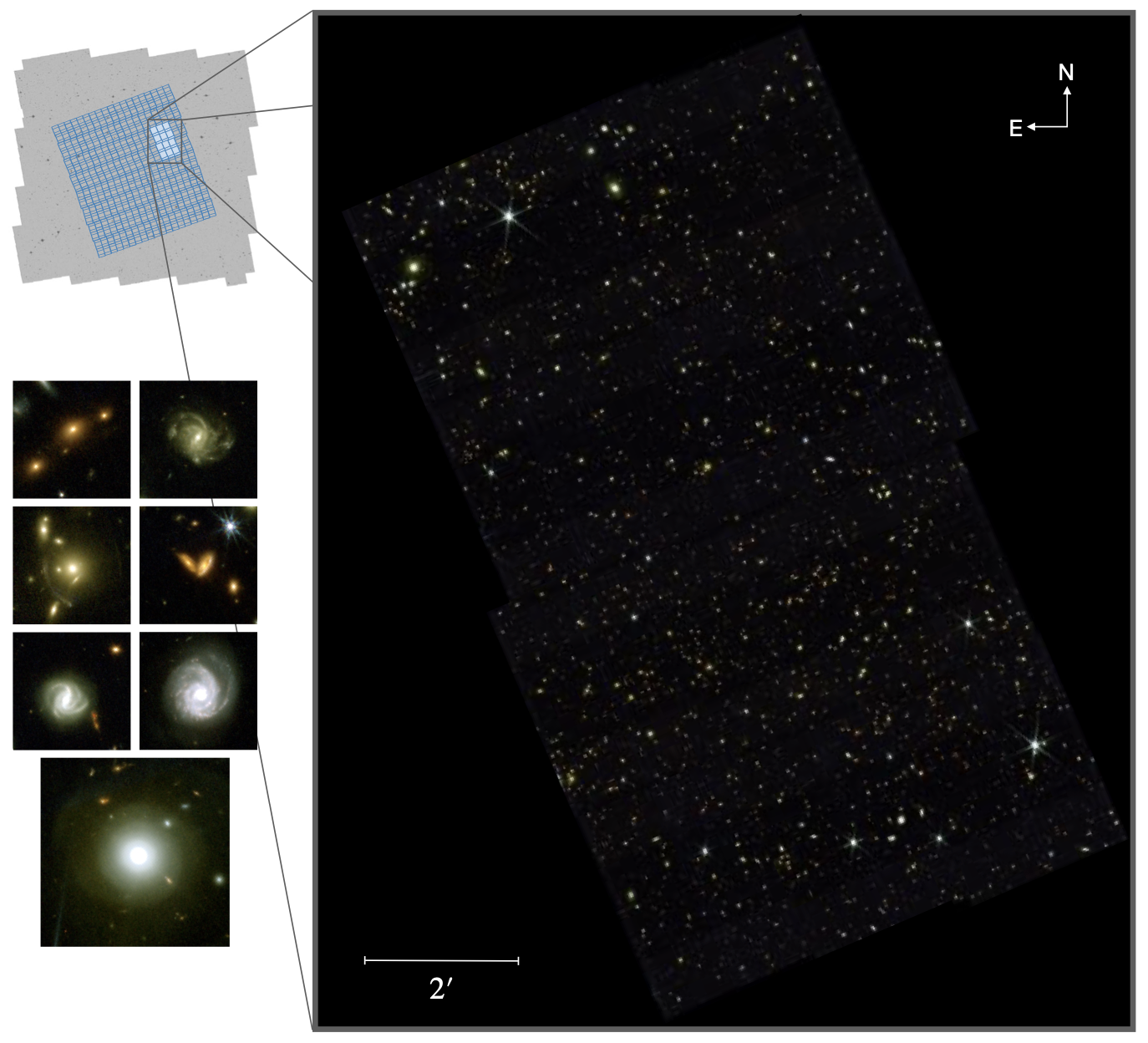

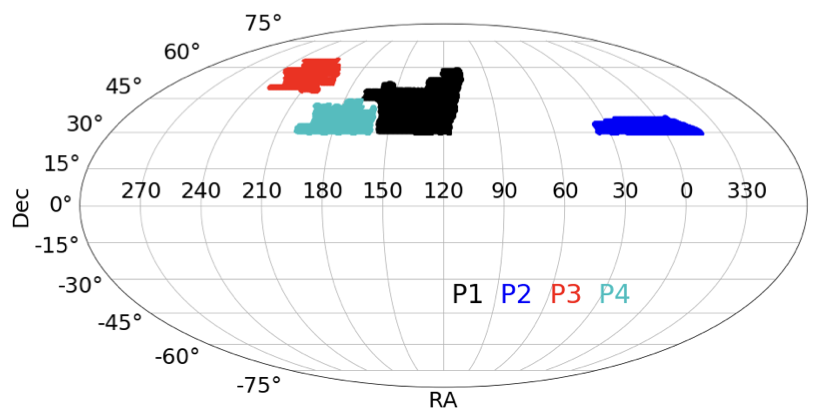

The Ultraviolet Near-Infrared Optical Northern Survey (UNIONS) is a “collaboration of collaborations” that is using the Canada-France-Hawai’i Telescope, the Pan-STARRS telescopes, and the Subaru Observatory to obtain ugriz images of a core survey region of 6250 deg² of the northern sky. The 10σ point source depths of the data, as measured within a 2″ diameter aperture, are [u, g, r, i, z] = [23.7, 24.5, 24.2, 23.8, 23.3] in AB magnitudes. UNIONS is addressing some of the most fundamental questions in astronomy, including the properties of dark matter, the growth of structure in the Universe from the very smallest galaxies to large-scale structure, and the assembly of the Milky Way. It is set to become a major ground-based legacy survey for the northern hemisphere for the next decade, and it provides an essential northern complement to the static-sky science of the Vera C. Rubin Observatory’s Legacy Survey of Space and Time. UNIONS supports the core science mission of the Euclid space mission by providing the data necessary in the northern hemisphere for the calibration of the wavelength dependence of the Euclid point-spread function and derivation of photometric redshifts in the North Galactic Cap. This region contains the highest quality sky for Euclid, with low backgrounds from the zodiacal light, stellar density, extinction, and emission from Galactic cirrus. Here, we describe the UNIONS survey components, science goals, data products, and the current status of the overall program.

@article{gwyn2025, url = {https://doi.org/10.3847/1538-3881/ae03ab}, archiveprefix = {arXiv}, eprint = {2503.13783}, year = {2025}, month = nov, publisher = {The American Astronomical Society}, volume = {170}, number = {6}, pages = {324}, author = {Gwyn, Stephen and McConnachie, Alan W. and Cuillandre, Jean-Charles and Chambers, Kenneth C. and Magnier, Eugene A. and de Boer, Thomas and Hudson, Michael J. and Oguri, Masamune and Furusawa, Hisanori and Hildebrandt, Hendrik and Carlberg, Raymond and Ellison, Sara L. and Furusawa, Junko and Gavazzi, Raphaël and Ibata, Rodrigo and Mellier, Yannick and Osato, Ken and Aussel, H. and Baumont, Lucie and Bayer, Manuel and Boulade, Olivier and Côté, Patrick and Chemaly, David and Daley, Cail and Duc, Pierre-Alain and Durret, Florence and Ellien, A. and Fabbro, Sébastien and Ferreira, Leonardo and Fitriana, Itsna K. and Le Floc’h, Emeric and Fudamoto, Yoshinobu and Gao, Hua and Goh, L. W. K. and Goto, Tomotsugu and Guerrini, Sacha and Guinot, Axel and Hénault-Brunet, Vincent and Hammer, Francois and Harikane, Yuichi and Hayashi, Kohei and Heesters, Nick and Ichikawa, Kohei and Kilbinger, Martin and Kuzma, P. B. and Li, Qinxun and Liaudat, Tobías I. and Lin, Chien-Cheng and Müller, Oliver and Martin, Nicolas F. and Matsuoka, Yoshiki and Medina, Gustavo E. and Miyatake, Hironao and Miyazaki, Satoshi and Mpetha, Charlie T. and Nagao, Tohru and Navarro, Julio F. and Niwano, Masafumi and Ogami, Itsuki and Okabe, Nobuhiro and Onoue, Masafusa and Paek, Gregory S. H. and Parker, Laura C. and Patton, David R. and Peters, Fabian Hervas and Prunet, Simon and Sánchez-Janssen, Rubén and Schultheis, M. and Sestito, Federico and Smith, Simon E. T. and Starck, J.-L. and Starkenburg, Else and Stone, Connor and Storfer, Christopher and Suzuki, Yoshihisa and Erben, T. and Taibi, Salvatore and Thomas, G. F. and Toba, Yoshiki and Uchiyama, Hisakazu and Valls-Gabaud, David and Venn, Kim A. and Van Waerbeke, Ludovic and Wainscoat, Richard J. 
and Wilkinson, Scott and Wittje, Anna and Yoshida, Taketo and Zhang, TianFang and Zhong, Yuxing}, title = {UNIONS: The Ultraviolet Near-infrared Optical Northern Survey}, journal = {The Astronomical Journal} } -

Generative imaging for radio interferometry with fast uncertainty quantification. Matthijs Mars, Tobías I. Liaudat, Jessica J. Whitney, and 2 more authors. arXiv e-prints, Jul 2025.

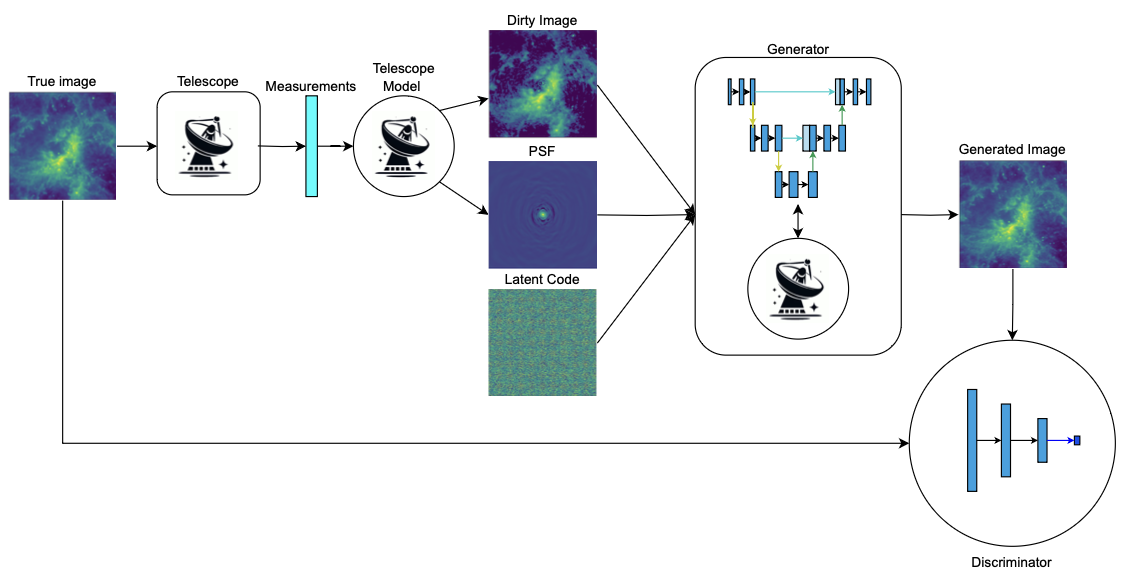

With the rise of large radio interferometric telescopes, particularly the SKA, there is a growing demand for computationally efficient image reconstruction techniques. Existing reconstruction methods, such as the CLEAN algorithm or proximal optimisation approaches, are iterative in nature, necessitating a large amount of compute. These methods either provide no uncertainty quantification or require large computational overhead to do so. Learned reconstruction methods have shown promise in providing efficient and high quality reconstruction. In this article we explore the use of generative neural networks that enable efficient approximate sampling of the posterior distribution for high quality reconstructions with uncertainty quantification. Our RI-GAN framework builds on the regularised conditional generative adversarial network (rcGAN) framework by integrating a gradient U-Net (GU-Net) architecture - a hybrid reconstruction model that embeds the measurement operator directly into the network. This framework uses Wasserstein GANs to improve training stability in combination with regularisation terms that combat mode collapse, which are typical problems for conditional GANs. This approach takes as input the dirty image and the point spread function (PSF) of the observation and provides efficient, high-quality image reconstructions that are robust to varying visibility coverages, generalises to images with an increased dynamic range, and provides informative uncertainty quantification. Our methods provide a significant step toward computationally efficient, scalable, and uncertainty-aware imaging for next-generation radio telescopes.

@article{mars2025, author = {{Mars}, Matthijs and {Liaudat}, Tobías I. and {Whitney}, Jessica J. and {Betcke}, Marta M. and {McEwen}, Jason D.}, title = {{Generative imaging for radio interferometry with fast uncertainty quantification}}, journal = {arXiv e-prints}, keywords = {Instrumentation and Methods for Astrophysics, Machine Learning}, year = {2025}, month = jul, eid = {arXiv:2507.21270}, pages = {arXiv:2507.21270}, archiveprefix = {arXiv}, eprint = {2507.21270}, primaryclass = {astro-ph.IM}, adsurl = {https://ui.adsabs.harvard.edu/abs/2025arXiv250721270M}, adsnote = {Provided by the SAO/NASA Astrophysics Data System} } -

DeepInverse: A Python package for solving imaging inverse problems with deep learning. Julián Tachella, Matthieu Terris, Samuel Hurault, and 25 more authors. arXiv e-prints, May 2025.

DeepInverse is an open-source PyTorch-based library for solving imaging inverse problems. The library covers all crucial steps in image reconstruction from the efficient implementation of forward operators (e.g., optics, MRI, tomography), to the definition and resolution of variational problems and the design and training of advanced neural network architectures. In this paper, we describe the main functionality of the library and discuss the main design choices.

@article{tachella2025, author = {{Tachella}, Juli{\'a}n and {Terris}, Matthieu and {Hurault}, Samuel and {Wang}, Andrew and {Chen}, Dongdong and {Nguyen}, Minh-Hai and {Song}, Maxime and {Davies}, Thomas and {Davy}, Leo and {Dong}, Jonathan and {Escande}, Paul and {Hertrich}, Johannes and {Hu}, Zhiyuan and {Liaudat}, Tob{\'\i}as I. and {Laurent}, Nils and {Levac}, Brett and {Massias}, Mathurin and {Moreau}, Thomas and {Modrzyk}, Thibaut and {Monroy}, Brayan and {Neumayer}, Sebastian and {Scanvic}, J{\'e}r{\'e}my and {Sarron}, Florian and {Sechaud}, Victor and {Schramm}, Georg and {Tang}, Chao and {Vo}, Romain and {Weiss}, Pierre}, title = {{DeepInverse: A Python package for solving imaging inverse problems with deep learning}}, journal = {arXiv e-prints}, keywords = {Image and Video Processing, 65F22, 68T07, I.4.4; I.4.5; I.2.6}, year = {2025}, month = may, eid = {arXiv:2505.20160}, pages = {arXiv:2505.20160}, archiveprefix = {arXiv}, eprint = {2505.20160}, primaryclass = {eess.IV}, adsurl = {https://ui.adsabs.harvard.edu/abs/2025arXiv250520160T}, adsnote = {Provided by the SAO/NASA Astrophysics Data System} } -

Breaking the degeneracy in stellar spectral classification from single wide-band images. Ezequiel Centofanti, Samuel Farrens, Jean-Luc Starck, and 3 more authors. A&A, Jan 2025.

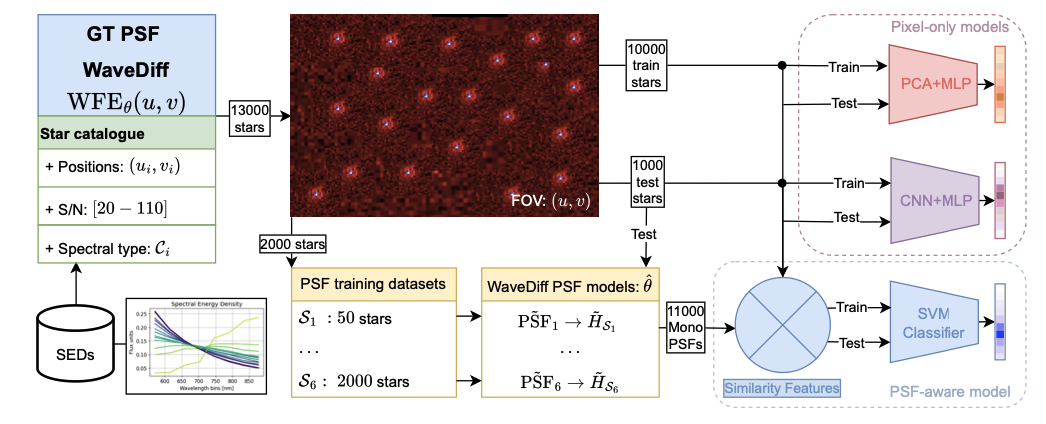

The spectral energy distribution (SED) of observed stars in wide-field images is crucial for chromatic point spread function (PSF) modelling methods, which use unresolved stars as integrated spectral samples of the PSF across the field of view. This is particularly important for weak gravitational lensing studies, where precise PSF modelling is essential to get accurate shear measurements. Previous research has demonstrated that the SED of stars can be inferred from low-resolution observations using machine-learning classification algorithms. However, a degeneracy exists between the PSF size, which can vary significantly across the field of view, and the spectral type of stars, leading to strong limitations of such methods. We propose a new SED classification method that incorporates stellar spectral information by using a preliminary PSF model, thereby breaking this degeneracy and enhancing the classification accuracy. Our method involves calculating a set of similarity features between an observed star and a preliminary PSF model at different wavelengths and applying a support vector machine to these similarity features to classify the observed star into a specific stellar class. The proposed approach achieves a 91% top-two accuracy, surpassing machine-learning methods that do not consider the spectral variation of the PSF. Additionally, we examined the impact of PSF modelling errors on the spectral classification accuracy.

@article{centofanti2025, author = {{Centofanti}, Ezequiel and {Farrens}, Samuel and {Starck}, Jean-Luc and {Liaudat}, Tobías I. and {Szapiro}, Alex and {Pollack}, Jennifer}, title = {{Breaking the degeneracy in stellar spectral classification from single wide-band images}}, keywords = {Astrophysics - Instrumentation and Methods for Astrophysics}, year = {2025}, month = jan, archiveprefix = {arXiv}, url = {https://doi.org/10.1051/0004-6361/202452224}, journal = {A&A}, volume = {694}, pages = {A228} } -

Cosmology from UNIONS weak lensing profiles of galaxy clusters. Charlie T. Mpetha, James E. Taylor, Yuba Amoura, and 12 more authors. arXiv e-prints, Jan 2025.

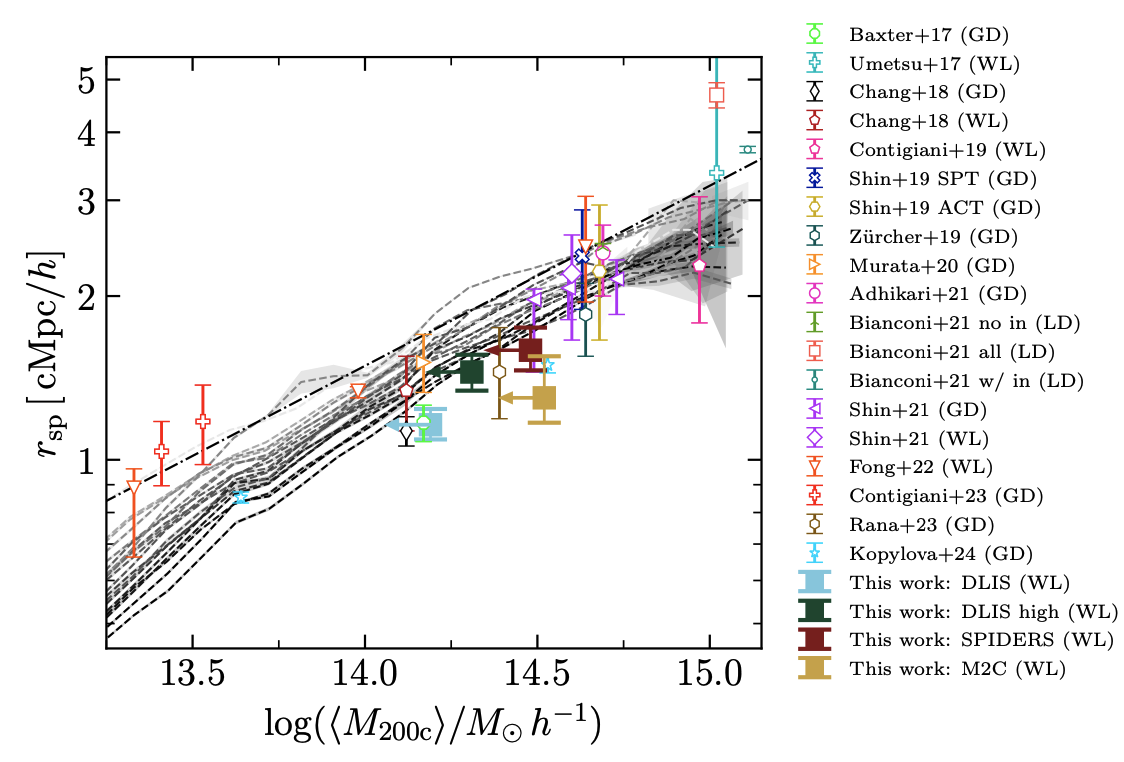

Cosmological information is encoded in the structure of galaxy clusters. In Universes with less matter and larger initial density perturbations, clusters form earlier and have more time to accrete material, leading to a more extended infall region. Thus, measuring the mean mass distribution in the infall region provides a novel cosmological test. The infall region is largely insensitive to baryonic physics, and provides a cleaner structural test than other measures of cluster assembly time such as concentration. We consider cluster samples from three publicly available galaxy cluster catalogues: the Spectroscopic Identification of eROSITA Sources (SPIDERS) catalogue, the X-ray and Sunyaev-Zeldovich effect selected clusters in the meta-catalogue M2C, and clusters identified in the Dark Energy Spectroscopic Instrument (DESI) Legacy Imaging Survey. Using a preliminary shape catalogue from the Ultraviolet Near Infrared Optical Northern Survey (UNIONS), we derive excess surface mass density profiles for each sample. We then compare the mean profile for the DESI Legacy sample, which is the most complete, to predictions from a suite of simulations covering a range of $\Omega_m$ and $\sigma_8$, obtaining constraints of $\Omega_m = 0.29 \pm 0.05$ and $\sigma_8 = 0.80 \pm 0.04$. We also measure mean (comoving) splashback radii for SPIDERS, M2C and DESI Legacy Imaging Survey clusters of $1.59^{+0.16}_{-0.13}$ cMpc/h, $1.30^{+0.25}_{-0.13}$ cMpc/h and $1.45 \pm 0.11$ cMpc/h respectively. Performing this analysis with the final UNIONS shape catalogue and the full sample of spectroscopically observed clusters in DESI, we can expect to improve on the best current constraints from cluster abundance studies by a factor of 2 or more.

@article{mpetha2025, author = {{Mpetha}, Charlie T. and {Taylor}, James E. and {Amoura}, Yuba and {Haggar}, Roan and {de Boer}, Thomas and {Guerrini}, Sacha and {Guinot}, Axel and {Hervas Peters}, Fabian and {Hildebrandt}, Hendrik and {Hudson}, Michael J. and {Kilbinger}, Martin and {Liaudat}, Tobías I. and {McConnachie}, Alan and {Van Waerbeke}, Ludovic and {Wittje}, Anna}, title = {{Cosmology from UNIONS weak lensing profiles of galaxy clusters}}, journal = {arXiv e-prints}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics}, year = {2025}, month = jan, eid = {arXiv:2501.09147}, pages = {arXiv:2501.09147}, archiveprefix = {arXiv}, primaryclass = {astro-ph.CO}, adsurl = {https://ui.adsabs.harvard.edu/abs/2025arXiv250109147M}, adsnote = {Provided by the SAO/NASA Astrophysics Data System} } -

LoFi: Neural Local Fields for Scalable Image Reconstruction. AmirEhsan Khorashadizadeh, Tobías I. Liaudat, Tianlin Liu, and 2 more authors. IEEE Transactions on Computational Imaging, Aug 2025.

We introduce LoFi (Local Field), a coordinate-based framework for image reconstruction which combines advantages of convolutional neural networks (CNNs) and neural fields or implicit neural representations (INRs). Unlike conventional deep neural networks, LoFi reconstructs an image one coordinate at a time, by processing only adaptive local information from the input which is relevant for the target coordinate. Similar to INRs, LoFi can efficiently recover images at any continuous coordinate, enabling image reconstruction at multiple resolutions. LoFi achieves excellent generalization to out-of-distribution data with memory usage almost independent of image resolution, while performing as well or better than standard deep learning models like CNNs and vision transformers (ViTs). Remarkably, training on 1024 × 1024 images requires less than 200 MB of memory, much less than standard CNNs and ViTs. Our experiments show that locality enables training on extremely small datasets with ten or fewer samples without overfitting and without explicit regularization or early stopping.

@article{khorashadizadeh2024, author = {{Khorashadizadeh}, AmirEhsan and {Liaudat}, Tobías I. and {Liu}, Tianlin and {McEwen}, Jason D. and {Dokmani{\'c}}, Ivan}, title = {{LoFi: Neural Local Fields for Scalable Image Reconstruction}}, journal = {IEEE Transactions on Computational Imaging}, keywords = {Image reconstruction;Inverse problems;Computed tomography;Memory management;Feature extraction;Training;Noise;Image resolution;X-ray imaging;Transformers}, year = {2025}, month = aug, eid = {arXiv:2411.04995}, pages = {1-14}, archiveprefix = {arXiv}, primaryclass = {cs.CV}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv241104995K}, adsnote = {Provided by the SAO/NASA Astrophysics Data System} }

2024

-

Uncertainty quantification for fast reconstruction methods using augmented equivariant bootstrap: Application to radio interferometry. Mostafa Cherif, Tobías I. Liaudat, Jonathan Kern, and 2 more authors. NeurIPS 2024 workshop on Machine Learning for Physics and the Physical Sciences, Oct 2024.

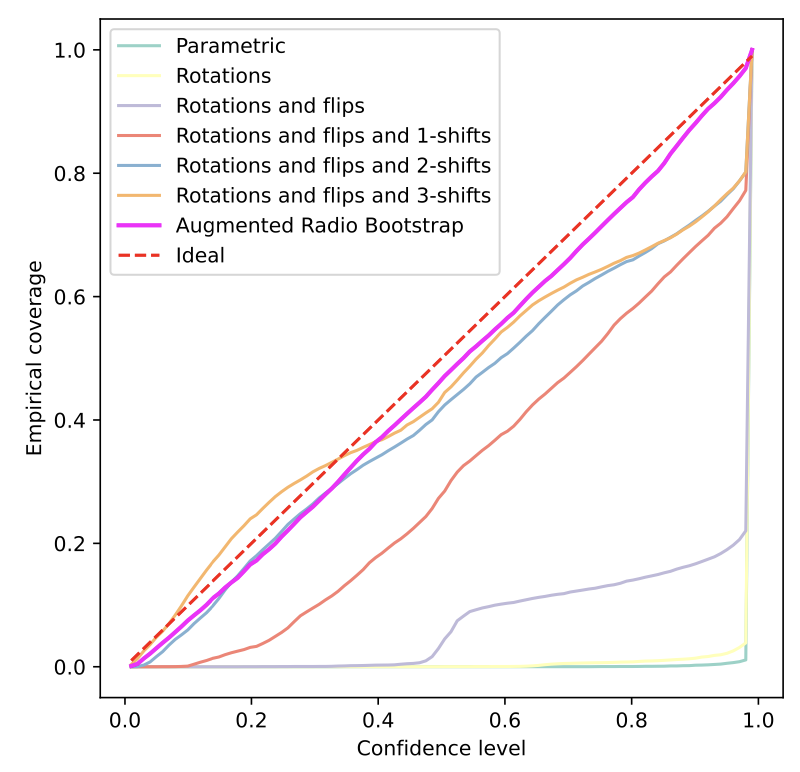

The advent of next-generation radio interferometers like the Square Kilometer Array promises to revolutionise our radio astronomy observational capabilities. The unprecedented volume of data these devices generate requires fast and accurate image reconstruction algorithms to solve the ill-posed radio interferometric imaging problem. Most state-of-the-art reconstruction methods lack trustworthy and scalable uncertainty quantification, which is critical for the rigorous scientific interpretation of radio observations. We propose an unsupervised technique based on a conformalized version of a radio-augmented equivariant bootstrapping method, which allows us to quantify uncertainties for fast reconstruction methods. Noticeably, we rely on reconstructions from ultra-fast unrolled algorithms. The proposed method brings more reliable uncertainty estimations to our problem than existing alternatives.

@article{cherif2024, author = {{Cherif}, Mostafa and {Liaudat}, Tobías I. and {Kern}, Jonathan and {Kervazo}, Christophe and {Bobin}, J{\'e}r{\^o}me}, title = {{Uncertainty quantification for fast reconstruction methods using augmented equivariant bootstrap: Application to radio interferometry}}, journal = {NeurIPS 2024 workshop on Machine Learning for Physics and the Physical Sciences}, keywords = {Astrophysics - Instrumentation and Methods for Astrophysics, Computer Science - Machine Learning}, year = {2024}, month = oct, eid = {arXiv:2410.23178}, pages = {arXiv:2410.23178}, archiveprefix = {arXiv}, eprint = {2410.23178}, url = {https://arxiv.org/abs/2410.23178}, primaryclass = {astro-ph.IM}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv241023178C}, adsnote = {Provided by the SAO/NASA Astrophysics Data System} } -

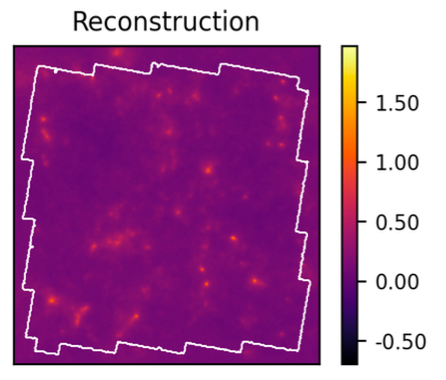

Generative modelling for mass-mapping with fast uncertainty quantification. Jessica J. Whitney, Tobías I. Liaudat, Matthew A. Price, and 2 more authors. arXiv e-prints, Oct 2024.

Understanding the nature of dark matter in the Universe is an important goal of modern cosmology. A key method for probing this distribution is via weak gravitational lensing mass-mapping - a challenging ill-posed inverse problem where one infers the convergence field from observed shear measurements. Upcoming stage IV surveys, such as those made by the Vera C. Rubin Observatory and Euclid satellite, will provide a greater quantity and precision of data for lensing analyses, necessitating high-fidelity mass-mapping methods that are computationally efficient and that also provide uncertainties for integration into downstream cosmological analyses. In this work we introduce MMGAN, a novel mass-mapping method based on a regularised conditional generative adversarial network (GAN) framework, which generates approximate posterior samples of the convergence field given shear data. We adopt Wasserstein GANs to improve training stability and apply regularisation techniques to overcome mode collapse, issues that otherwise are particularly acute for conditional GANs. We train and validate our model on a mock COSMOS-style dataset before applying it to true COSMOS survey data. Our approach significantly outperforms the Kaiser-Squires technique and achieves similar reconstruction fidelity as alternative state-of-the-art deep learning approaches. Notably, while alternative approaches for generating samples from a learned posterior are slow (e.g. requiring 10 GPU minutes per posterior sample), MMGAN can produce a high-quality convergence sample in less than a second.

@article{whitney2024b, author = {{Whitney}, Jessica J. and {Liaudat}, Tobías I. and {Price}, Matthew A. and {Mars}, Matthijs and {McEwen}, Jason D.}, title = {{Generative modelling for mass-mapping with fast uncertainty quantification}}, journal = {arXiv e-prints}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Instrumentation and Methods for Astrophysics}, year = {2024}, month = oct, eid = {arXiv:2410.24197}, pages = {arXiv:2410.24197}, archiveprefix = {arXiv}, eprint = {2410.24197}, url = {https://arxiv.org/abs/2410.24197}, primaryclass = {astro-ph.CO}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv241024197W}, adsnote = {Provided by the SAO/NASA Astrophysics Data System} } -

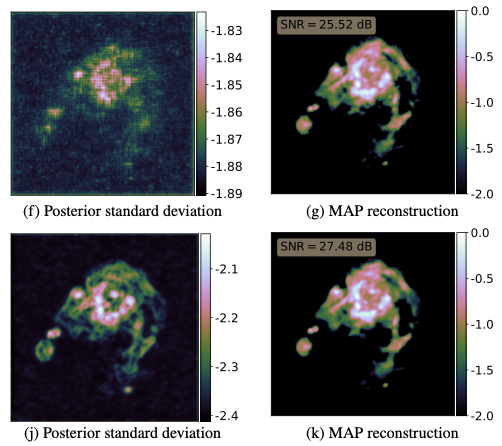

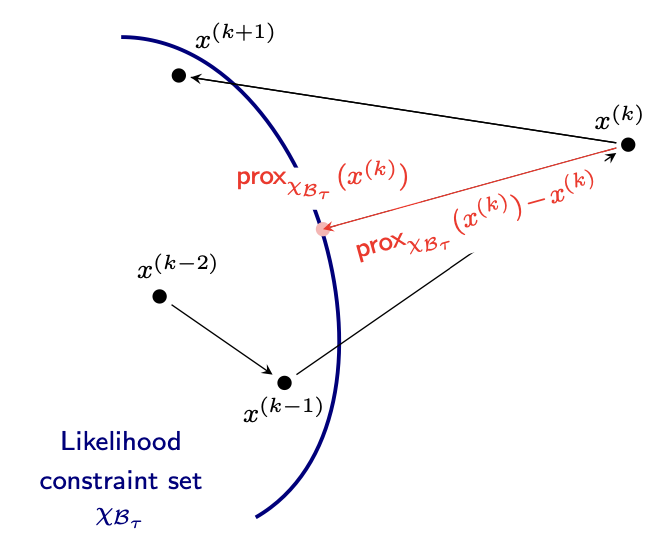

Scalable Bayesian uncertainty quantification with data-driven priors for radio interferometric imaging. Tobías I. Liaudat, Matthijs Mars, Matthew A. Price, and 3 more authors. RAS Techniques and Instruments, Aug 2024.

Next-generation radio interferometers like the Square Kilometer Array have the potential to unlock scientific discoveries thanks to their unprecedented angular resolution and sensitivity. One key to unlocking their potential resides in handling the deluge and complexity of incoming data. This challenge requires building radio interferometric (RI) imaging methods that can cope with the massive data sizes and provide high-quality image reconstructions with uncertainty quantification (UQ). This work proposes a method coined quantifAI to address UQ in RI imaging with data-driven (learned) priors for high-dimensional settings. Our model, rooted in the Bayesian framework, uses a physically motivated model for the likelihood. The model exploits a data-driven convex prior potential, which can encode complex information learned implicitly from simulations and guarantee the log-concavity of the posterior. We leverage probability concentration phenomena of high-dimensional log-concave posteriors to obtain information about the posterior, avoiding MCMC sampling techniques. We rely on convex optimization methods to compute the MAP estimation, which are known to be faster and to scale better with dimension than MCMC strategies. quantifAI allows us to compute local credible intervals and perform hypothesis testing of structure on the reconstructed image. We propose a novel fast method to compute pixel-wise uncertainties at different scales, which uses three and six orders of magnitude fewer likelihood evaluations than other UQ methods like length of the credible intervals and Monte Carlo posterior sampling, respectively. We demonstrate our method by reconstructing RI images in a simulated setting and carrying out fast and scalable UQ, which we validate with MCMC sampling. Our method shows an improved image quality and more meaningful uncertainties than the benchmark method based on a sparsity-promoting prior.

@article{liaudat2023_3, author = {{Liaudat}, Tobías I. and {Mars}, Matthijs and {Price}, Matthew A. and {Pereyra}, Marcelo and {Betcke}, Marta M. and {McEwen}, Jason D.}, title = {{Scalable Bayesian uncertainty quantification with data-driven priors for radio interferometric imaging}}, journal = {RAS Techniques and Instruments}, volume = {3}, number = {1}, pages = {505-534}, year = {2024}, month = aug, issn = {2752-8200}, url = {https://doi.org/10.1093/rasti/rzae030}, eprint = {https://academic.oup.com/rasti/article-pdf/3/1/505/59024060/rzae030.pdf} } -

Using conditional GANs for convergence map reconstruction with uncertainties. Jessica Whitney, Tobías I. Liaudat, Matt Price, and 2 more authors. arXiv e-prints, May 2024.

Understanding the large-scale structure of the Universe and unravelling the mysteries of dark matter are fundamental challenges in contemporary cosmology. Reconstruction of the cosmological matter distribution from lensing observables, referred to as 'mass-mapping', is an important aspect of this quest. Mass-mapping is an ill-posed problem, meaning there is inherent uncertainty in any convergence map reconstruction. The demand for fast and efficient reconstruction techniques is rising as we prepare for upcoming surveys. We present a novel approach which utilises deep learning, in particular a conditional Generative Adversarial Network (cGAN), to approximate samples from a Bayesian posterior distribution, meaning they can be interpreted in a statistically robust manner. By combining data-driven priors with recent regularisation techniques, we introduce an approach that facilitates the swift generation of high-fidelity mass maps. Furthermore, to validate the effectiveness of our approach, we train the model on mock COSMOS-style data, generated using Columbia Lensing's kappaTNG mock weak lensing suite. These preliminary results showcase compelling convergence map reconstructions, and ongoing refinement efforts are underway to enhance the robustness of our method further.

@article{whitney2024, author = {{Whitney}, Jessica and {Liaudat}, Tob{\'\i}as I. and {Price}, Matt and {Mars}, Matthijs and {McEwen}, Jason D.}, title = {{Using conditional GANs for convergence map reconstruction with uncertainties}}, journal = {arXiv e-prints}, keywords = {Astrophysics - Cosmology and Nongalactic Astrophysics, Astrophysics - Instrumentation and Methods for Astrophysics}, year = {2024}, month = may, eid = {arXiv:2406.15424}, pages = {arXiv:2406.15424}, archiveprefix = {arXiv}, eprint = {2406.15424}, primaryclass = {astro-ph.CO}, adsurl = {https://ui.adsabs.harvard.edu/abs/2024arXiv240615424W}, adsnote = {Provided by the SAO/NASA Astrophysics Data System} } -

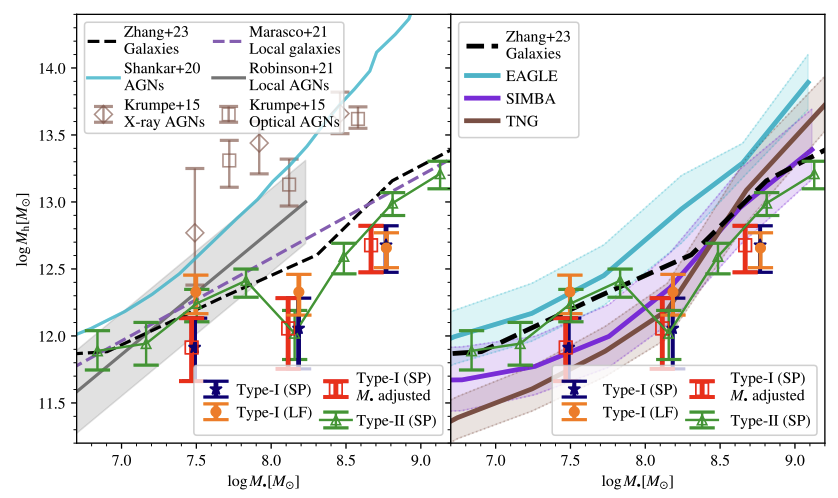

Black-Hole-to-Halo Mass Relation From UNIONS Weak Lensing. Qinxun Li, Martin Kilbinger, Wentao Luo, and 17 more authors. The Astrophysical Journal Letters, Jul 2024.

This Letter presents, for the first time, direct constraints on the black hole-halo mass relation using weak gravitational-lensing measurements. We construct type I and type II active galactic nucleus (AGN) samples from the Sloan Digital Sky Survey, with a mean redshift of 0.4 (0.1) for type I (type II) AGNs. This sample is cross correlated with weak-lensing shear from the Ultraviolet Near Infrared Optical Northern Survey. We compute the excess surface mass density of the halos associated with 36,181 AGNs from 94,308,561 lensed galaxies and fit the halo mass in bins of black hole mass. We find that more massive AGNs reside in more massive halos. The relation between halo mass and black hole mass is well described by a power law of slope 0.6 for both type I and type II samples, in agreement with models that link black hole growth to baryon feedback. We see no dependence on AGN type or redshift in the black hole-halo mass relation below a black hole mass of $10^{8.5}\,M_\odot$. Above that mass, we find more massive halos for the low-z type II sample compared to the high-z type I sample, but this difference may be interpreted as systematic error in the black hole mass measurements. Our results are consistent with previous measurements for non-AGN galaxies. At a fixed black hole mass, our weak-lensing halo masses are consistent with galaxy rotation curves but significantly lower than galaxy-clustering measurements. Finally, our results are broadly consistent with state-of-the-art hydrodynamical cosmological simulations, providing a new constraint for black hole masses in simulations.

@article{li2024, title = {{Black-Hole-to-Halo Mass Relation From UNIONS Weak Lensing}}, author = {Li, Qinxun and Kilbinger, Martin and Luo, Wentao and Wang, Kai and Wang, Huiyuan and Wittje, Anna and Hildebrandt, Hendrik and van Waerbeke, Ludovic and Hudson, Michael J. and Farrens, Samuel and {Liaudat}, Tobías I. and Liu, Huiling and Zhang, Ziwen and Wang, Qingqing and Russier, Elisa and Guinot, Axel and Baumont, Lucie and Peters, Fabian Hervas and de Boer, Thomas and Wang, Jiaqi}, journal = {The Astrophysical Journal Letters}, url = {https://dx.doi.org/10.3847/2041-8213/ad58b0}, year = {2024}, month = jul, publisher = {The American Astronomical Society}, volume = {969}, number = {2}, pages = {L25}, archiveprefix = {arXiv}, primaryclass = {astro-ph.GA} }

2023

-

Proximal Nested Sampling with Data-Driven Priors for Physical Scientists. Jason D. McEwen, Tobías I. Liaudat, Matthew A. Price, and 2 more authors. Physical Sciences Forum, Jun 2023.

Proximal nested sampling was introduced recently to open up Bayesian model selection for high-dimensional problems such as computational imaging. The framework is suitable for models with a log-convex likelihood, which are ubiquitous in the imaging sciences. The purpose of this article is two-fold. First, we review proximal nested sampling in a pedagogical manner in an attempt to elucidate the framework for physical scientists. Second, we show how proximal nested sampling can be extended in an empirical Bayes setting to support data-driven priors, such as deep neural networks learned from training data.

@article{mcewen2023, author = {{McEwen}, Jason D. and {Liaudat}, Tobías I. and {Price}, Matthew A. and {Cai}, Xiaohao and {Pereyra}, Marcelo}, title = {Proximal Nested Sampling with Data-Driven Priors for Physical Scientists}, journal = {Physical Sciences Forum}, volume = {9}, year = {2023}, month = jun, number = {1}, article-number = {13}, url = {https://www.mdpi.com/2673-9984/9/1/13}, issn = {2673-9984}, } -

Point spread function modelling for astronomical telescopes: a review focused on weak gravitational lensing studies. Tobias Liaudat, Jean-Luc Starck, and Martin Kilbinger. Frontiers in Astronomy and Space Sciences, Jun 2023

The accurate modelling of the point spread function (PSF) is of paramount importance in astronomical observations, as it allows for the correction of distortions and blurring caused by the telescope and atmosphere. PSF modelling is crucial for accurately measuring celestial objects’ properties. The last decades have brought us a steady increase in the power and complexity of astronomical telescopes and instruments. Upcoming galaxy surveys like Euclid and Legacy Survey of Space and Time (LSST) will observe an unprecedented amount and quality of data. Modelling the PSF for these new facilities and surveys requires novel modelling techniques that can cope with the ever-tightening error requirements. The purpose of this review is threefold. Firstly, we introduce the optical background required for a more physically motivated PSF modelling and propose an observational model that can be reused for future developments. Secondly, we provide an overview of the different physical contributors of the PSF, which includes the optic- and detector-level contributors and atmosphere. We expect that the overview will help better understand the modelled effects. Thirdly, we discuss the different methods for PSF modelling from the parametric and non-parametric families for ground- and space-based telescopes, with their advantages and limitations. Validation methods for PSF models are then addressed, with several metrics related to weak-lensing studies discussed in detail. Finally, we explore current challenges and future directions in PSF modelling for astronomical telescopes.

@article{liaudat2023_2, archiveprefix = {arXiv}, author = {{Liaudat}, Tobias and {Starck}, Jean-Luc and {Kilbinger}, Martin}, journal = {Frontiers in Astronomy and Space Sciences}, volume = {10}, issn = {2296-987X}, month = jun, pages = {arXiv:2306.07996}, primaryclass = {astro-ph.IM}, title = {{Point spread function modelling for astronomical telescopes: a review focused on weak gravitational lensing studies}}, year = {2023}, bdsk-url-1 = {https://doi.org/10.48550/arXiv.2306.07996} } -

COSMOS-Web: An Overview of the JWST Cosmic Origins Survey. Caitlin M. Casey, Jeyhan S. Kartaltepe, Nicole E. Drakos, and 83 more authors. The Astrophysical Journal, Aug 2023

We present the survey design, implementation, and outlook for COSMOS-Web, a 255 hr treasury program conducted by the James Webb Space Telescope in its first cycle of observations. COSMOS-Web is a contiguous 0.54 deg^2 NIRCam imaging survey in four filters (F115W, F150W, F277W, and F444W) that will reach 5σ point-source depths ranging 27.5–28.2 mag. In parallel, we will obtain 0.19 deg^2 of MIRI imaging in one filter (F770W) reaching 5σ point-source depths of 25.3–26.0 mag. COSMOS-Web will build on the rich heritage of multiwavelength observations and data products available in the COSMOS field. The design of COSMOS-Web is motivated by three primary science goals: (1) to discover thousands of galaxies in the Epoch of Reionization (6 ≲ z ≲ 11) and map reionization’s spatial distribution, environments, and drivers on scales sufficiently large to mitigate cosmic variance, (2) to identify hundreds of rare quiescent galaxies at z > 4 and place constraints on the formation of the universe’s most-massive galaxies (M⋆ > 10^10 M⊙), and (3) directly measure the evolution of the stellar-mass-to-halo-mass relation using weak gravitational lensing out to z ∼ 2.5 and measure its variance with galaxies’ star formation histories and morphologies. In addition, we anticipate COSMOS-Web’s legacy value to reach far beyond these scientific goals, touching many other areas of astrophysics, such as the identification of the first direct collapse black hole candidates, ultracool subdwarf stars in the Galactic halo, and possibly the identification of z > 10 pair-instability supernovae. In this paper we provide an overview of the survey’s key measurements, specifications, goals, and prospects for new discovery.

@article{casey2023, author = {Casey, Caitlin M. and Kartaltepe, Jeyhan S. and Drakos, Nicole E. and Franco, Maximilien and Harish, Santosh and Paquereau, Louise and Ilbert, Olivier and Rose, Caitlin and Cox, Isabella G. and Nightingale, James W. and Robertson, Brant E. and Silverman, John D. and Koekemoer, Anton M. and Massey, Richard and McCracken, Henry Joy and Rhodes, Jason and Akins, Hollis B. and Allen, Natalie and Amvrosiadis, Aristeidis and Arango-Toro, Rafael C. and Bagley, Micaela B. and Bongiorno, Angela and Capak, Peter L. and Champagne, Jaclyn B. and Chartab, Nima and {\'O}scar A. Ch{\'a}vez Ortiz and Chworowsky, Katherine and Cooke, Kevin C. and Cooper, Olivia R. and Darvish, Behnam and Ding, Xuheng and Faisst, Andreas L. and Finkelstein, Steven L. and Fujimoto, Seiji and Gentile, Fabrizio and Gillman, Steven and Gould, Katriona M. L. and Gozaliasl, Ghassem and Hayward, Christopher C. and He, Qiuhan and Hemmati, Shoubaneh and Hirschmann, Michaela and Jahnke, Knud and Jin, Shuowen and Khostovan, Ali Ahmad and Kokorev, Vasily and Lambrides, Erini and Laigle, Clotilde and Larson, Rebecca L. and Leung, Gene C. K. and Liu, Daizhong and Liaudat, Tobias and Long, Arianna S. and Magdis, Georgios and Mahler, Guillaume and Mainieri, Vincenzo and Manning, Sinclaire M. and Maraston, Claudia and Martin, Crystal L. and McCleary, Jacqueline E. and McKinney, Jed and McPartland, Conor J. R. and Mobasher, Bahram and Pattnaik, Rohan and Renzini, Alvio and Rich, R. Michael and Sanders, David B. and Sattari, Zahra and Scognamiglio, Diana and Scoville, Nick and Sheth, Kartik and Shuntov, Marko and Sparre, Martin and Suzuki, Tomoko L. and Talia, Margherita and Toft, Sune and Trakhtenbrot, Benny and Urry, C. Megan and Valentino, Francesco and Vanderhoof, Brittany N. and Vardoulaki, Eleni and Weaver, John R. and Whitaker, Katherine E. and Wilkins, Stephen M. 
and Yang, Lilan and Zavala, Jorge A.}, date-added = {2023-10-06 15:12:01 +0100}, date-modified = {2023-10-06 15:12:41 +0100}, journal = {The Astrophysical Journal}, month = aug, number = {1}, pages = {31}, publisher = {The American Astronomical Society}, title = {COSMOS-Web: An Overview of the JWST Cosmic Origins Survey}, url = {https://dx.doi.org/10.3847/1538-4357/acc2bc}, volume = {954}, year = {2023}, bdsk-url-1 = {https://dx.doi.org/10.3847/1538-4357/acc2bc} } -

Rethinking data-driven point spread function modeling with a differentiable optical model. Tobias Liaudat, Jean-Luc Starck, Martin Kilbinger, and 1 more author. Inverse Problems, Feb 2023

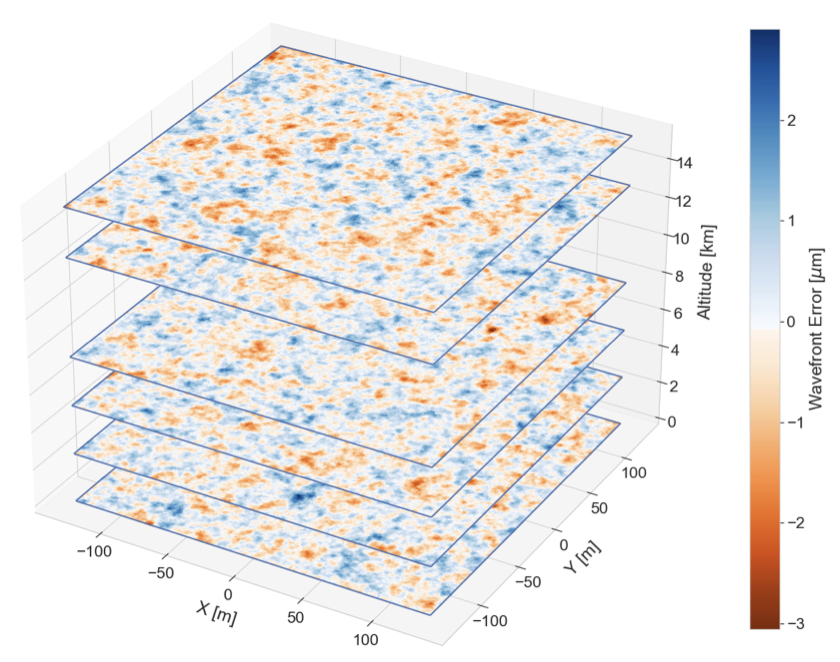

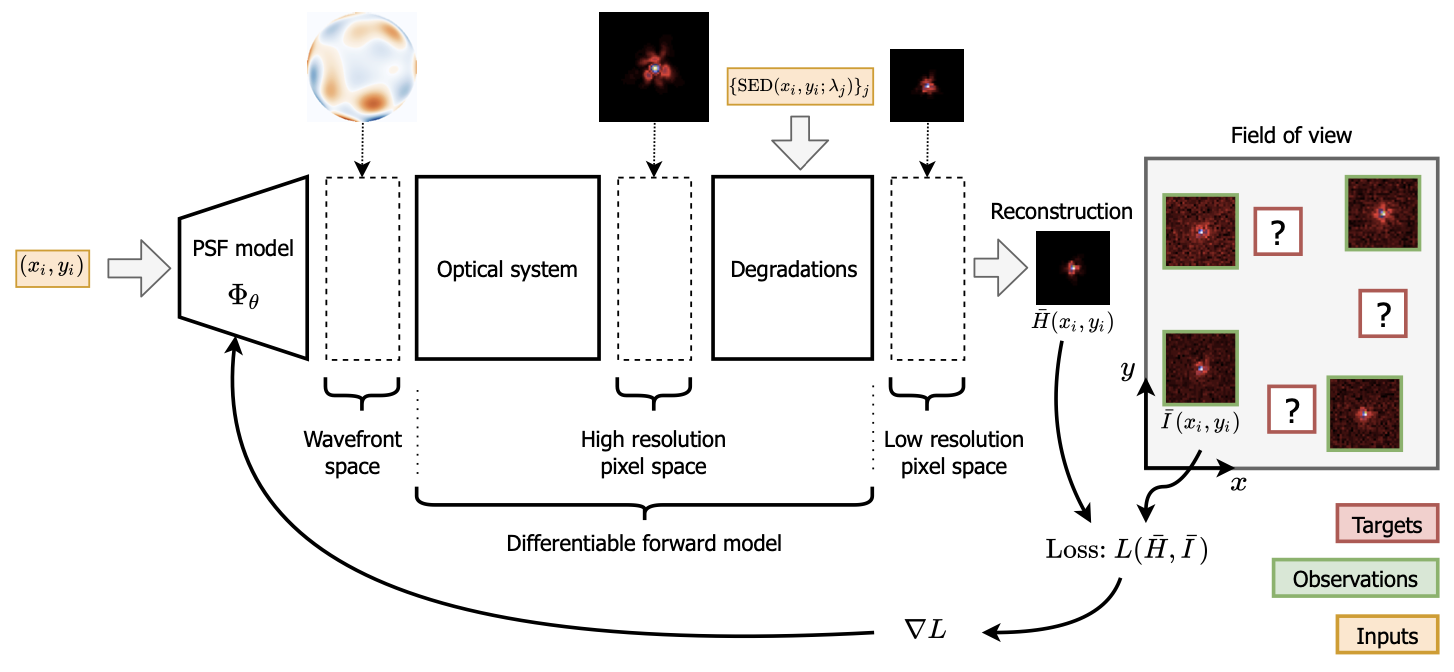

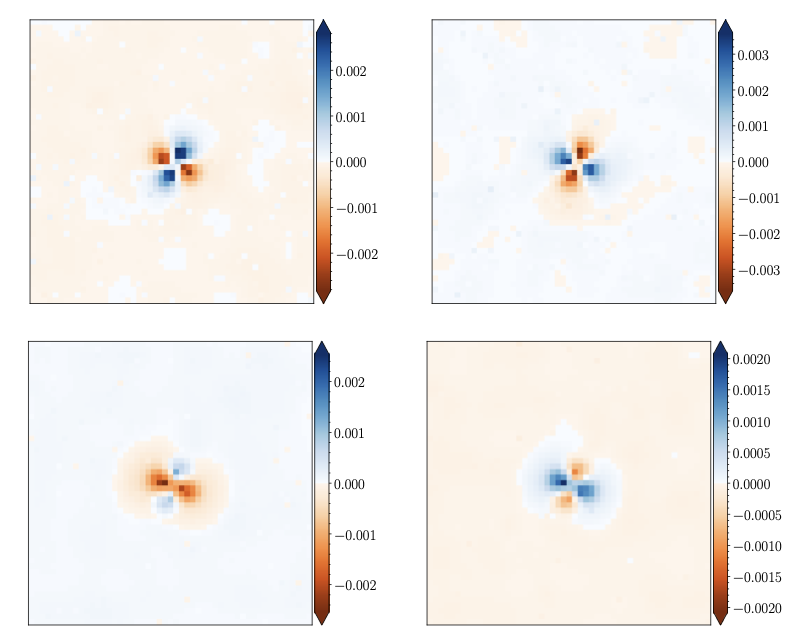

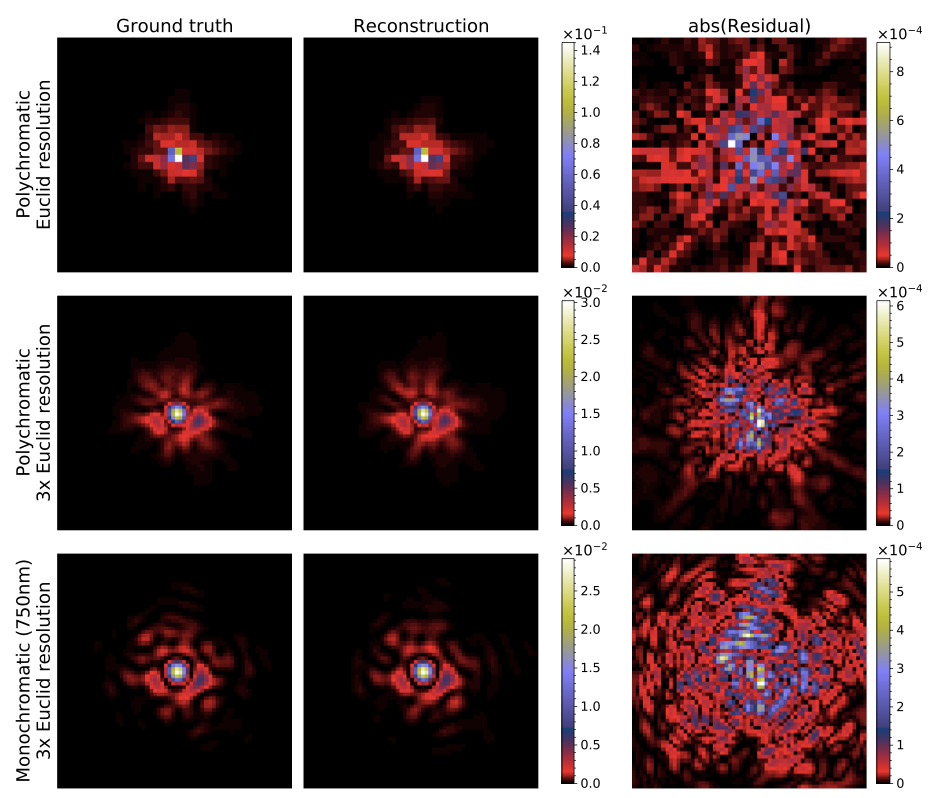

In astronomy, upcoming space telescopes with wide-field optical instruments have a spatially varying point spread function (PSF). Specific scientific goals require a high-fidelity estimation of the PSF at target positions where no direct measurement of the PSF is provided. Even though observations of the PSF are available at some positions of the field of view (FOV), they are undersampled, noisy, and integrated into wavelength in the instrument’s passband. PSF modeling represents a challenging ill-posed problem, as it requires building a model from these observations that can infer a super-resolved PSF at any wavelength and position in the FOV. Current data-driven PSF models can tackle spatial variations and super-resolution. However, they are not capable of capturing PSF chromatic variations. Our model, coined WaveDiff, proposes a paradigm shift in the data-driven modeling of the point spread function field of telescopes. We change the data-driven modeling space from the pixels to the wavefront by adding a differentiable optical forward model into the modeling framework. This change allows the transfer of a great deal of complexity from the instrumental response into the forward model. The proposed model relies on efficient automatic differentiation technology and modern stochastic first-order optimization techniques recently developed by the thriving machine-learning community. Our framework paves the way to building powerful, physically motivated models that do not require special calibration data. This paper demonstrates the WaveDiff model in a simplified setting of a space telescope. The proposed framework represents a performance breakthrough with respect to the existing state-of-the-art data-driven approach. The pixel reconstruction errors decrease six-fold at observation resolution and 44-fold for a 3x super-resolution. The ellipticity errors are reduced at least 20 times, and the size error is reduced more than 250 times. By only using noisy broad-band in-focus observations, we successfully capture the PSF chromatic variations due to diffraction. WaveDiff source code and examples associated with this paper are available at this link.

@article{liaudat2023, author = {Liaudat, Tobias and Starck, Jean-Luc and Kilbinger, Martin and Frugier, Pierre-Antoine}, date-added = {2023-10-06 15:09:53 +0100}, date-modified = {2023-10-06 15:09:53 +0100}, journal = {Inverse Problems}, month = feb, number = {3}, pages = {035008}, publisher = {IOP Publishing}, title = {Rethinking data-driven point spread function modeling with a differentiable optical model}, url = {https://dx.doi.org/10.1088/1361-6420/acb664}, volume = {39}, year = {2023}, bdsk-url-1 = {https://dx.doi.org/10.1088/1361-6420/acb664} }

2022

-

ShapePipe: A modular weak-lensing processing and analysis pipeline. Farrens, S., Guinot, A., Kilbinger, M., and 8 more authors. A&A, Feb 2022

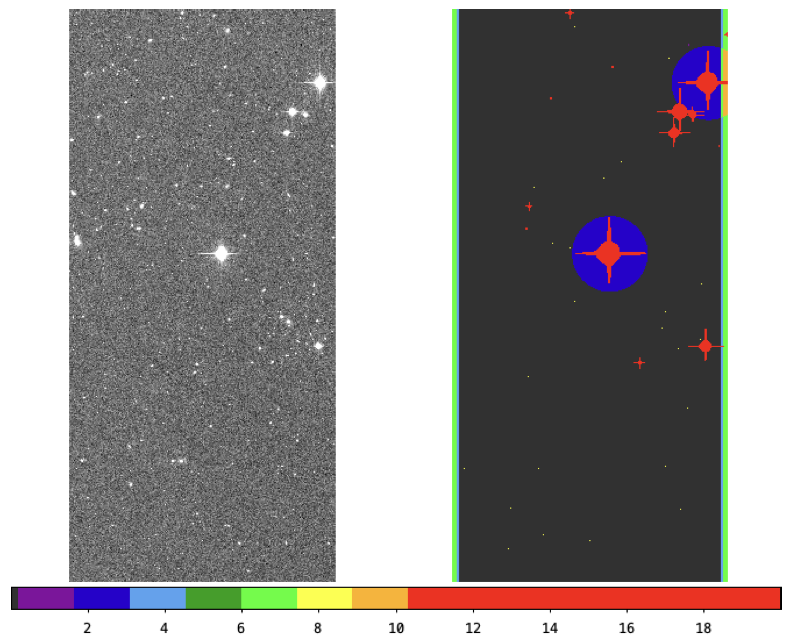

We present the first public release of ShapePipe, an open-source and modular weak-lensing measurement, analysis, and validation pipeline written in Python. We describe the design of the software and justify the choices made. We provide a brief description of all the modules currently available and summarise how the pipeline has been applied to real Ultraviolet Near-Infrared Optical Northern Survey data. Finally, we mention plans for future applications and development. The code and accompanying documentation are publicly available on GitHub.

@article{farrens2022, author = {{Farrens, S.} and {Guinot, A.} and {Kilbinger, M.} and {Liaudat, T.} and {Baumont, L.} and {Jimenez, X.} and {Peel, A.} and {Pujol, A.} and {Schmitz, M.} and {Starck, J.-L.} and {Vitorelli, A. Z.}}, date-added = {2023-10-06 15:12:01 +0100}, date-modified = {2023-10-06 15:12:51 +0100}, journal = {A\&A}, pages = {A141}, title = {ShapePipe: A modular weak-lensing processing and analysis pipeline}, url = {https://doi.org/10.1051/0004-6361/202243970}, volume = {664}, year = {2022}, bdsk-url-1 = {https://doi.org/10.1051/0004-6361/202243970} } -

ShapePipe: A new shape measurement pipeline and weak-lensing application to UNIONS/CFIS data. Guinot, Axel, Kilbinger, Martin, Farrens, Samuel, and 17 more authors. A&A, Feb 2022

UNIONS is an ongoing collaboration that will provide the largest deep photometric survey of the Northern sky in four optical bands to date. As part of this collaboration, CFIS is taking r-band data with an average seeing of 0.65 arcsec, which is complete to magnitude 24.5 and thus ideal for weak-lensing studies. We perform the first weak-lensing analysis of CFIS r-band data over an area spanning 1700 deg^2 of the sky. We create a catalogue with measured shapes for 40 million galaxies, corresponding to an effective density of 6.8 galaxies per square arcminute, and demonstrate a low level of systematic biases. This work serves as the basis for further cosmological studies using the full UNIONS survey of 4800 deg^2 when completed. Here we present ShapePipe, a newly developed weak-lensing pipeline. This pipeline makes use of state-of-the-art methods such as Ngmix for accurate galaxy shape measurement. Shear calibration is performed with metacalibration. We carry out extensive validation tests on the Point Spread Function (PSF), and on the galaxy shapes. In addition, we create realistic image simulations to validate the estimated shear. We quantify the PSF model accuracy and show that the level of systematics is low as measured by the PSF residuals. Their effect on the shear two-point correlation function is sub-dominant compared to the cosmological contribution on angular scales <100 arcmin. The additive shear bias is below 5×10^−4, and the residual multiplicative shear bias is at most 10^−3 as measured on image simulations. Using COSEBIs we show that there are no significant B-modes present in second-order shear statistics. We present convergence maps and see clear correlations of the E-mode with known cluster positions. We measure the stacked tangential shear profile around Planck clusters at a significance higher than 4σ.

@article{guinot2022, author = {{Guinot, Axel} and {Kilbinger, Martin} and {Farrens, Samuel} and {Peel, Austin} and {Pujol, Arnau} and {Schmitz, Morgan} and {Starck, Jean-Luc} and {Erben, Thomas} and {Gavazzi, Raphael} and {Gwyn, Stephen} and {Hudson, Michael J.} and {Hildebrandt, Hendrik} and {Tobias, Liaudat} and {Miller, Lance} and {Spitzer, Isaac} and {Van Waerbeke, Ludovic} and {Cuillandre, Jean-Charles} and {Fabbro, S\'ebastien} and {McConnachie, Alan} and {Mellier, Yannick}}, date-added = {2023-10-06 15:12:01 +0100}, date-modified = {2023-10-06 15:12:57 +0100}, journal = {A\&A}, pages = {A162}, title = {ShapePipe: A new shape measurement pipeline and weak-lensing application to UNIONS/CFIS data}, url = {https://doi.org/10.1051/0004-6361/202141847}, volume = {666}, year = {2022}, bdsk-url-1 = {https://doi.org/10.1051/0004-6361/202141847} } -

Data-driven modelling of ground-based and space-based telescope’s point spread functions. Tobias Ignacio Liaudat. Université Paris-Saclay, Oct 2022. 2022UPASP118

Gravitational lensing is the distortion of the images of distant galaxies by intervening massive objects and constitutes a powerful probe of the Large Scale Structure of our Universe. Cosmologists use weak (gravitational) lensing to study the nature of dark matter and its spatial distribution. These studies require highly accurate measurements of galaxy shapes, but the telescope’s instrumental response, or point spread function (PSF), deforms our observations. This deformation can be mistaken for weak lensing effects in the galaxy images, thus being one of the primary sources of systematic error when doing weak lensing science. Therefore, estimating a reliable and accurate PSF model is crucial for the success of any weak lensing mission. The PSF field can be interpreted as a convolutional kernel that affects each of our observations of interest and that varies spatially, spectrally, and temporally. The PSF model needs to cope with these variations and is constrained by specific stars in the field of view. These stars, considered point sources, provide us with degraded samples of the PSF field. The observations go through different degradations depending on the properties of the telescope, including undersampling, an integration over the instrument’s passband, and additive noise. We finally build the PSF model using these degraded observations and then use the model to infer the PSF at the position of galaxies. This procedure constitutes the ill-posed inverse problem of PSF modelling. The core of this thesis has been the development of new data-driven, also known as non-parametric, PSF models. We have developed a new PSF model for ground-based telescopes, coined MCCD, which can simultaneously model the entire focal plane. Consequently, MCCD has more available stars to constrain a more complex model. The method is based on a matrix factorisation scheme, sparsity, and an alternating optimisation procedure. We have included the PSF model in a high-performance shape measurement pipeline and used it to process 3500 deg² of r-band observations from the Canada-France Imaging Survey. A shape catalogue has been produced and will soon be released. The main goal of this thesis has been to develop a data-driven PSF model that can address the challenges raised by one of the most ambitious weak lensing missions so far, the Euclid space mission. The main difficulties related to the Euclid mission are that the observations are undersampled and integrated into a single wide passband. Therefore, it is hard to recover and model the PSF chromatic variations from such observations. Our main contribution has been a new framework for data-driven PSF modelling based on a differentiable optical forward model allowing us to build a data-driven model for the wavefront. The new model, coined WaveDiff, is based on a matrix factorisation scheme and Zernike polynomials. The model relies on modern gradient-based methods and automatic differentiation for optimisation, which only uses noisy broad-band in-focus observations. Results show that WaveDiff can model the PSFs’ chromatic variations and handle super-resolution with high accuracy.

@phdthesis{liaudat2022_thesis, address = {Saclay, France}, author = {Liaudat, Tobias Ignacio}, date-added = {2023-10-06 15:09:53 +0100}, date-modified = {2023-10-06 15:09:53 +0100}, month = oct, note = {2022UPASP118}, school = {Universit{\'e} Paris-Saclay}, title = {Data-driven modelling of ground-based and space-based telescope's point spread functions}, url = {http://www.theses.fr/2022UPASP118/document}, year = {2022}, bdsk-url-1 = {http://www.theses.fr/2022UPASP118/document} }

2021

-

Multi-CCD modelling of the point spread function. Tobias Liaudat, Jérôme Bonnin, Jean-Luc Starck, and 4 more authors. A&A, Oct 2021

Context. Galaxy imaging surveys observe a vast number of objects, which are ultimately affected by the instrument’s point spread function (PSF). It is weak lensing missions in particular that are aimed at measuring the shape of galaxies, and PSF effects represent a significant source of systematic errors that must be handled appropriately. This requires a high level of accuracy at the modelling stage as well as in the estimation of the PSF at galaxy positions. Aims. The goal of this work is to estimate a PSF at galaxy positions, which is also referred to as a non-parametric PSF estimation and which starts from a set of noisy star image observations distributed over the focal plane. To accomplish this, we need our model to precisely capture the PSF field variations over the field of view and then to recover the PSF at the chosen positions. Methods. In this paper, we propose a new method, coined Multi-CCD (MCCD) PSF modelling, which simultaneously creates a PSF field model over the entirety of the instrument’s focal plane. It allows us to capture global as well as local PSF features through the use of two complementary models that enforce different spatial constraints. Most existing non-parametric models build one model per charge-coupled device, which can lead to difficulties in capturing global ellipticity patterns. Results. We first tested our method on a realistic simulated dataset, comparing it with two state-of-the-art PSF modelling methods (PSFEx and RCA) and finding that our method outperforms both of them. Then we contrasted our approach with PSFEx based on real data from the Canada-France Imaging Survey, which uses the Canada-France-Hawaii Telescope. We show that our PSF model is less noisy and achieves a 22% gain on the pixel’s root mean square error with respect to PSFEx. Conclusions. We present and share the code for a new PSF modelling algorithm that models the PSF field over the whole focal plane and is mature enough to handle real data.

@article{liaudat2020, author = {Liaudat, Tobias and Bonnin, J{\'e}r{\^o}me and Starck, Jean-Luc and Schmitz, Morgan A. and Guinot, Axel and Kilbinger, Martin and Gwyn, Stephen D. J.}, date-added = {2023-10-06 15:09:53 +0100}, date-modified = {2023-10-06 15:09:53 +0100}, journal = {A\&A}, pages = {A27}, title = {{Multi-CCD modelling of the point spread function}}, url = {https://doi.org/10.1051/0004-6361/202039584}, volume = {646}, year = {2021}, bdsk-url-1 = {https://doi.org/10.1051/0004-6361/202039584} } -

Rethinking the modeling of the instrumental response of telescopes with a differentiable optical model. Tobias Liaudat, Jean-Luc Starck, Martin Kilbinger, and 1 more author. In NeurIPS 2021 Machine Learning for Physical sciences workshop, Nov 2021

We propose a paradigm shift in the data-driven modeling of the instrumental response field of telescopes. By adding a differentiable optical forward model into the modeling framework, we change the data-driven modeling space from the pixels to the wavefront. This allows us to transfer a great deal of complexity from the instrumental response into the forward model while being able to adapt to the observations, remaining data-driven. Our framework offers a way forward to building powerful models that are physically motivated, interpretable, and that do not require special calibration data. We show that for a simplified setting of a space telescope, this framework represents a real performance breakthrough compared to existing data-driven approaches, with reconstruction errors decreasing 5-fold at observation resolution and more than 10-fold for a 3x super-resolution. We successfully model chromatic variations of the instrument’s response only using noisy broad-band in-focus observations.

@inproceedings{liaudat2021, archiveprefix = {arXiv}, arxivid = {2111.12541}, author = {Liaudat, Tobias and Starck, Jean-Luc and Kilbinger, Martin and Frugier, Pierre-Antoine}, booktitle = {NeurIPS 2021 Machine Learning for Physical sciences workshop}, date-added = {2023-10-06 15:09:53 +0100}, date-modified = {2023-10-06 15:09:53 +0100}, eprint = {2111.12541}, journal = {arXiv:2111.12541}, month = nov, title = {{Rethinking the modeling of the instrumental response of telescopes with a differentiable optical model}}, url = {http://arxiv.org/abs/2111.12541}, year = {2021}, bdsk-url-1 = {http://arxiv.org/abs/2111.12541} } -

Semi-Parametric Wavefront Modelling for the Point Spread Function. Tobias Liaudat, Jean-Luc Starck, and Martin Kilbinger. In 52ème Journées de Statistique de la Société Française de Statistique (SFdS), Jun 2021

We introduce a new approach to estimate the point spread function (PSF) field of an optical telescope by building a semi-parametric model of its wavefront error. This method is particularly advantageous because it does not require calibration observations to recover the wavefront error and it naturally takes into account the chromaticity of the optical system. The model is end-to-end differentiable and relies on a diffraction operator that allows us to compute monochromatic PSFs from the wavefront information.

@inproceedings{liaudat2021_b, address = {Nice, France}, author = {Liaudat, Tobias and Starck, Jean-Luc and Kilbinger, Martin}, booktitle = {{52{\`e}me Journ{\'e}es de Statistique de la Soci{\'e}t{\'e} Fran{\c c}aise de Statistique (SFdS)}}, date-added = {2023-10-06 15:09:53 +0100}, date-modified = {2023-10-06 15:09:53 +0100}, hal_id = {hal-03444576}, hal_version = {v1}, keywords = {Point Spread Function Modelling ; Image Processing ; Optics ; Weak Lensing}, month = jun, title = {{Semi-Parametric Wavefront Modelling for the Point Spread Function}}, year = {2021}, bdsk-url-1 = {https://hal.archives-ouvertes.fr/hal-03444576} }

2020

-

Faster and better sparse blind source separation through mini-batch optimization. Christophe Kervazo, Tobias Liaudat, and Jérôme Bobin. Digital Signal Processing, Jun 2020

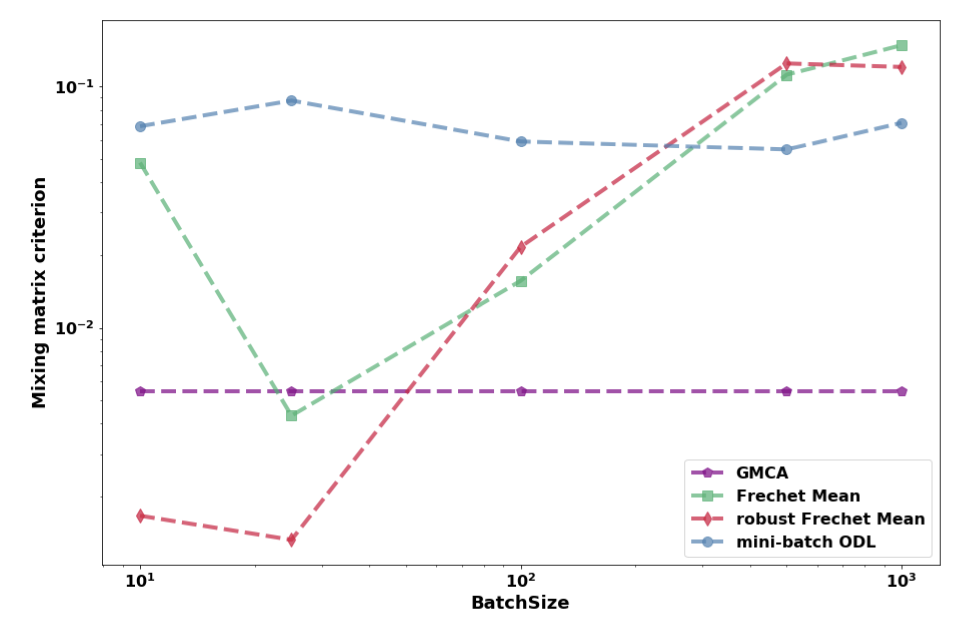

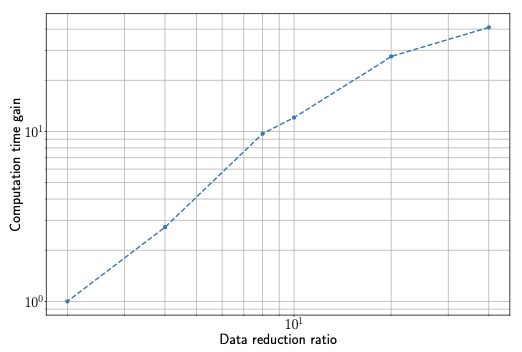

Sparse Blind Source Separation (sBSS) plays a key role in scientific domains as different as biomedical imaging, remote sensing or astrophysics. Such fields however require the development of increasingly faster and scalable BSS methods without sacrificing the separation performances. To that end, we introduce in this work a new distributed sparse BSS algorithm based on a mini-batch extension of the Generalized Morphological Component Analysis algorithm (GMCA). Precisely, it combines a robust projected alternated least-squares method with mini-batch optimization. The originality further lies in the use of a manifold-based aggregation of the asynchronously estimated mixing matrices. Numerical experiments are carried out on realistic spectroscopic spectra, and highlight the ability of the proposed distributed GMCA (dGMCA) to provide very good separation results even when very small mini-batches are used. Quite unexpectedly, the algorithm can further outperform the (non-distributed) state-of-the-art methods for highly sparse sources.

@article{kervazo2020, author = {Kervazo, Christophe and Liaudat, Tobias and Bobin, J{\'e}r{\^o}me}, date-added = {2023-10-06 15:09:53 +0100}, date-modified = {2023-10-06 15:16:08 +0100}, issn = {1051-2004}, journal = {Digital Signal Processing}, keywords = {Blind source separation, Sparse representations, Mini-batches optimization, Matrix factorization}, pages = {102827}, title = {Faster and better sparse blind source separation through mini-batch optimization}, url = {http://www.sciencedirect.com/science/article/pii/S105120042030172X}, volume = {106}, year = {2020}, bdsk-url-1 = {http://www.sciencedirect.com/science/article/pii/S105120042030172X}, bdsk-url-2 = {https://doi.org/10.1016/j.dsp.2020.102827} }

2019

-

Distributed sparse BSS for large-scale datasets. Tobias Liaudat, Jérôme Bobin, and Christophe Kervazo. In 2019 SPARS conference proceedings, Apr 2019

Blind Source Separation (BSS) [1] is widely used to analyze multichannel data from fields ranging from astrophysics to medicine. However, existing methods do not efficiently handle very large datasets. In this work, we propose a new method coined DGMCA (Distributed Generalized Morphological Component Analysis) in which the original BSS problem is decomposed into subproblems that can be tackled in parallel, alleviating the large-scale issue. We propose to use the RCM (Riemannian Center of Mass [6][7]) to aggregate, during the iterative process, the estimations yielded by the different subproblems. The approach is made robust both by a clever choice of the weights of the RCM and by the adaptation of the heuristic parameter choice proposed in [4] to the parallel framework. The results obtained show that the proposed approach is able to handle large-scale problems with a linear acceleration, performing at the same level as GMCA and maintaining an automatic choice of parameters.

@inproceedings{liaudat2019, author = {Liaudat, Tobias and Bobin, J{\'e}r{\^o}me and Kervazo, Christophe}, booktitle = {2019 SPARS conference proceedings}, date-added = {2023-10-06 15:09:53 +0100}, date-modified = {2023-10-06 15:09:53 +0100}, hal_id = {hal-02088466}, hal_version = {v1}, month = apr, title = {{Distributed sparse BSS for large-scale datasets}}, url = {https://hal.archives-ouvertes.fr/hal-02088466}, year = {2019}, bdsk-url-1 = {https://hal.archives-ouvertes.fr/hal-02088466} }